Paho MQTT Client (Javascript), Envoy, VerneMQ: debugging disconnects

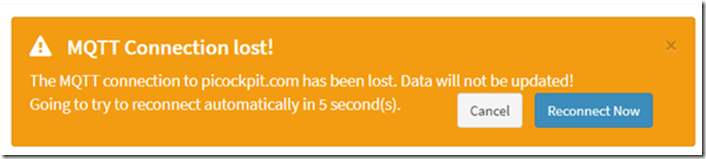

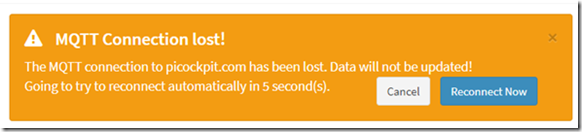

After deploying picockpit to my server, a bug was observed: the web frontend would disconnect in very regular intervals.

This had not been present on the local development environment. Users of picockpit reported the same bug.

I have debugged it today, and applied a hotfix. It turns out it was a timing issue.

TL;DR how to fix this

set up a shorter keepAliveInterval for Paho. 60 seconds is the default, but with network latencies this will lead to these timeouts.

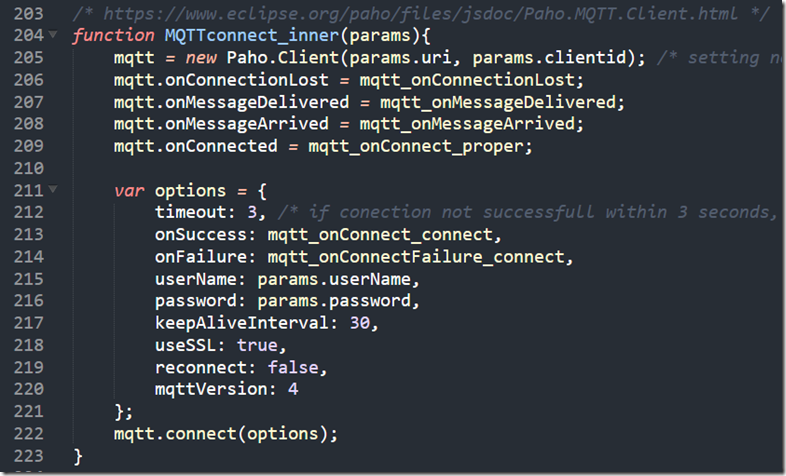

Here is my code for the connection (inside pcp-code.js which is loaded by the webpage):

/* https://www.eclipse.org/paho/files/jsdoc/Paho.MQTT.Client.html */

function MQTTconnect_inner(params){

mqtt = new Paho.Client(params.uri, params.clientid); /* setting not required parameters as JS does not allow to pass named positional parameters */

mqtt.onConnectionLost = mqtt_onConnectionLost;

mqtt.onMessageDelivered = mqtt_onMessageDelivered;

mqtt.onMessageArrived = mqtt_onMessageArrived;

mqtt.onConnected = mqtt_onConnect_proper;var options = {

timeout: 3, /* if conection not successfull within 3 seconds, it is deemed to have failed */

onSuccess: mqtt_onConnect_connect,

onFailure: mqtt_onConnectFailure_connect,

userName: params.userName,

password: params.password,

keepAliveInterval: 30,

useSSL: true,

reconnect: false,

mqttVersion: 4

};

mqtt.connect(options);

}

(reproduced as image as well)

Debugging steps

Timing

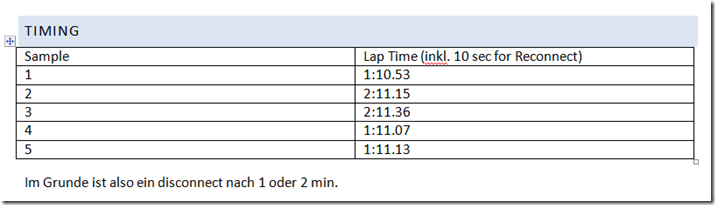

I sat down with my mobile phone to time the timeouts, and see whether there was a pattern there.

In my implementation, Paho is reconnected by surrounding JavaScript code every 10 seconds on timeout.

We see the Paho javascript MQTT client loosing the connection every minute or every two minutes. This is already a strong indication that this is systematic, possibly a forced disconnect by the server.

JavaScript console

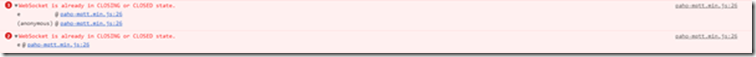

The error message I see in the console is:

WebSocket is already in CLOSING or CLOSED state.

In retrospect, this is possibly Paho trying to send the PINGREQ but failing just by a couple of seconds or possibly microseconds, as the server has disconnected the socket.

NB: For superior compatibility with company firewalls, etc. I run Paho through websockets.

Verification with other browser

I have seen this issue with Chrome (my main browser) and Firefox. Therefore it is not due to a browser problem.

(I was led to believe that it might be to some browser issues by this website: https://github.com/socketio/socket.io/issues/3259)

Debugging VerneMQ

see also:

- https://pi3g.com/2019/08/08/vernemq-how-to-disconnect-clients-forceably-per-command-line/

- https://docs.vernemq.com/administration/managing-sessions

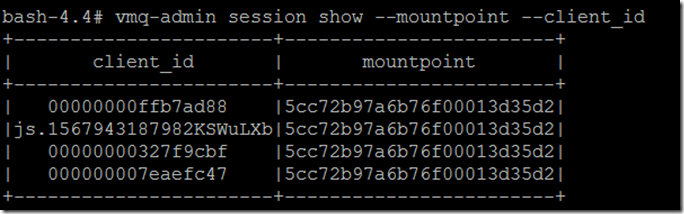

Show full client_id and mountpoint to avoid truncation:

vmq-admin session show –mountpoint –client_id

Run a trace:

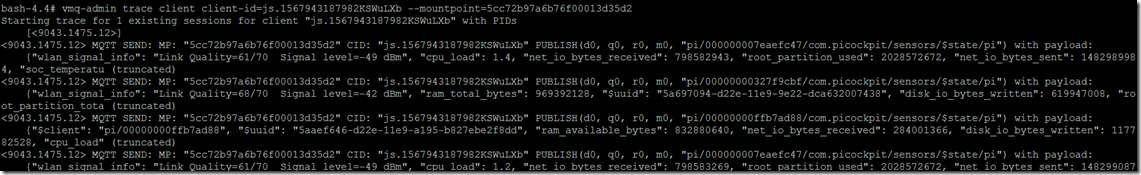

vmq-admin trace client client-id=js.1567943187982KSWuLXb –mountpoint=5cc72b97a6b76f00013d35d2

As you see, you will get a trace of what is sent to the client and received from the client.

Starting trace for 1 existing sessions for client “js.1567943187982KSWuLXb” with PIDs

[<9043.1475.12>]

<9043.1475.12> MQTT SEND: MP: “5cc72b97a6b76f00013d35d2” CID: “js.1567943187982KSWuLXb” PUBLISH(d0, q0, r0, m0, “pi/000000007eaefc47/com.picockpit/sensors/$state/pi”) with payload:

{“wlan_signal_info”: “Link Quality=61/70 Signal level=-49 dBm”, “cpu_load”: 1.4, “net_io_bytes_received”: 798582943, “root_partition_used”: 2028572672, “net_io_bytes_sent”: 1482989984, “soc_temperatu (truncated)

I also saw a PINGREQ() and PINGRESP(), keeping the session alive in this trace:

<9043.1475.12> MQTT RECV: MP: “5cc72b97a6b76f00013d35d2” CID: “js.1567943187982KSWuLXb” PINGREQ()

<9043.1475.12> MQTT SEND: MP: “5cc72b97a6b76f00013d35d2” CID: “js.1567943187982KSWuLXb” PINGRESP()

Here is how the disconnect looks like:

<9043.1475.12> MQTT SEND: MP: “5cc72b97a6b76f00013d35d2” CID: “js.1567943187982KSWuLXb” PUBLISH(d0, q0, r0, m0, “pi/00000000327f9cbf/com.picockpit/sensors/$state/pi”) with payload:

{“wlan_signal_info”: “Link Quality=68/70 Signal level=-42 dBm”, “ram_total_bytes”: 969392128, “$uuid”: “928ca964-d22e-11e9-9e22-dca632007438”, “disk_io_bytes_written”: 619947008, “root_partition_tota (truncated)

<9043.1475.12> Trace session for js.1567943187982KSWuLXb stopped

Unfortunately there is no information that this is due to a timeout waiting for a pingreq() from the client.

Debugging Envoy

See also:

As there was no information that this is due to a pingreq timeout, suspicion fell upon envoy disconnecting due to timeouts (possibly handling websockets incorrectly due to me not setting some config properly).

The configuration was, however, applied properly:

upgrade_configs:

– upgrade_type: “websocket”

enabled: true

for the correct path (/mqtt)

There are no timeouts apart from connect_timeout for the backends (which were set to different values, surely not a minute).

To enable debug output from envoy, you have to add an environment variable to the docker-compose.yaml:

environment:

loglevel: debug

as the last lines in the stanza for the service envoy

Here’s what I see as trace:

‘:status’, ‘101’

‘connection’, ‘Upgrade’

‘date’, ‘Sun, 08 Sep 2019 12:08:36 GMT’

‘sec-websocket-accept’, ‘mWAEi6p3RBFflHkNbxTMbnhaG4I=’

‘sec-websocket-extensions’, ‘permessage-deflate; client_max_window_bits=15’

‘sec-websocket-protocol’, ‘mqtt’

‘server’, ‘envoy’

‘upgrade’, ‘websocket’

‘content-length’, ‘0’

[2019-09-08 12:08:38.757][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:08:43.760][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:08:48.755][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:08:53.759][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:08:58.762][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:03.762][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:08.762][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:13.765][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:18.766][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:23.766][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:28.765][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:33.766][1][debug][main] [source/server/server.cc:170] flushing stats

[2019-09-08 12:09:37.190][26][debug][connection] [source/common/network/connection_impl.cc:520] [C9] remote Close

[2019-09-08 12:09:37.190][26][debug][connection] [source/common/network/connection_impl.cc:190] [C9] closing socket: 0

[2019-09-08 12:09:37.190][26][debug][client] [source/common/http/codec_client.cc:82] [C9] disconnect. resetting 1 pending requests

[2019-09-08 12:09:37.190][26][debug][client] [source/common/http/codec_client.cc:105] [C9] request reset

[2019-09-08 12:09:37.190][26][debug][router] [source/common/router/router.cc:868] [C8][S10834556124828578161] upstream reset: reset reason connection termination

[2019-09-08 12:09:37.190][26][debug][http] [source/common/http/conn_manager_impl.cc:187] [C8][S10834556124828578161] doEndStream() resetting stream

[2019-09-08 12:09:37.191][26][debug][http] [source/common/http/conn_manager_impl.cc:1596] [C8][S10834556124828578161] stream reset

[2019-09-08 12:09:37.191][26][debug][connection] [source/common/network/connection_impl.cc:101] [C8] closing data_to_write=0 type=2

[2019-09-08 12:09:37.191][26][debug][connection] [source/common/network/connection_impl.cc:653] [C8] setting delayed close timer with timeout 1000 ms

[2019-09-08 12:09:37.191][26][debug][pool] [source/common/http/http1/conn_pool.cc:129] [C9] client disconnected, failure reason:

[2019-09-08 12:09:38.192][26][debug][connection] [source/common/network/connection_impl.cc:642] [C8] triggered delayed close

[2019-09-08 12:09:38.192][26][debug][connection] [source/common/network/connection_impl.cc:190] [C8] closing socket: 1

[2019-09-08 12:09:38.192][26][debug][connection] [source/extensions/transport_sockets/tls/ssl_socket.cc:270] [C8] SSL shutdown: rc=0

[2019-09-08 12:09:38.192][26][debug][connection] [source/extensions/transport_sockets/tls/ssl_socket.cc:201] [C8]

[2019-09-08 12:09:38.193][26][debug][main] [source/server/connection_handler_impl.cc:80] [C8] adding to cleanup list

[2019-09-08 12:09:38.767][1][debug][main] [source/server/server.cc:170] flushing stats

This coincides with the disconnect on the client

As you can see, this is initiated by

[C9] remote Close

This again throws suspicion back upon VerneMQ disconnecting the client due to a timeout issue.

Paho Timing options

A good source for reading about MQTT and keep-alives is Steve’s Internet Guide:

The client must send keepalive packets (PINGREQ), in the absence of other control packets, and is responsible for the interval between the packets not exceeding the Keep Alive value set on the broker.

The client can send the packets earlier

The next step of the investigation lead to looking at Paho and the keepalive. My initial concern was that Paho would not send keepalives by itself, so that I would have to run a loop and send them.

This, however, was found not to be true – Paho actually handles the keepalives itself.

Here’s the source code of Paho MQTT Javascript:

line 703 and following have the “Pinger”.

it defines a doTimeout function, which in turn returns doPing

doPing is defined a bit further below, it runs the actual ping, and sets up a timeout, with this._keepAliveInterval.

this._client.socket.send(pingReq);

this.timeout = setTimeout(doTimeout(this), this._keepAliveInterval);

By now, I am pretty certain that the keepAliveInterval has to be decreased for Paho from the default 60 seconds. Possibly due to network latencies, what worked for me locally does not work remotely any more.

how do we set up a different time interval?

Paho documentation:

The Paho client instance has a method connect, which takes an object as its single parameter.

The object has different attributes, one of them being the keepAliveInterval:

I set this keepAliveInterval to 30 sec. Here’s the current set of parameters I use:

var options = {

timeout: 3, /* if conection not successfull within 3 seconds, it is deemed to have failed */

onSuccess: mqtt_onConnect_connect,

onFailure: mqtt_onConnectFailure_connect,

userName: params.userName,

password: params.password,

keepAliveInterval: 30,

useSSL: true,

reconnect: false,

mqttVersion: 4

};

mqtt.connect(options);

With mqtt being an instance of the Paho MQTT Client.